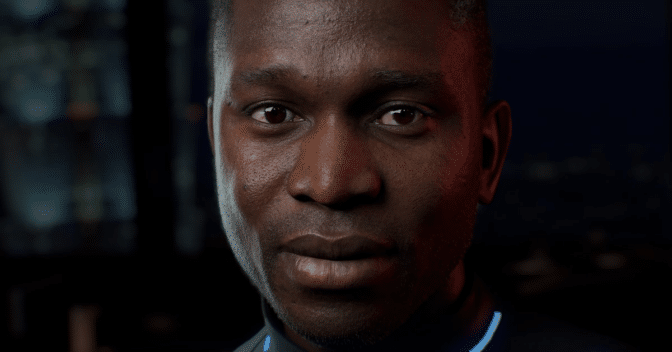

At Gamescom this week, NVIDIA announced that NVIDIA ACE, a suite of technologies for bringing digital humans to life with generative AI, now includes the company’s first on-device small language model (SLM), powered locally by RTX AI.

The model, called Nemotron-4 4B Instruct, provides better role-play, retrieval-augmented generation, and function-calling capabilities, so game characters can more intuitively comprehend player instructions, respond to gamers, and perform more accurate and relevant actions.

Available as an NVIDIA NIM microservice for cloud and on-device deployment by game developers, the model is optimized for low memory usage, offering faster response times and giving developers a way to take advantage of over 100 million GeForce RTX-powered PCs and laptops and NVIDIA RTX-powered workstations.

The SLM Advantage

An AI model’s accuracy and performance depend on the size and quality of the dataset used for training. Large language models are trained on vast amounts of data, but they are typically general-purpose and contain excess information for most uses.

SLMs, on the other hand, focus on specific use cases. So, even with less data, they can deliver more accurate responses quickly, both of which are critical for conversing naturally with digital humans.

Nemotron-4 4B was first distilled from the larger Nemotron-4 15B LLM. This process requires the smaller model, called a “student,” to mimic the outputs of the larger model, appropriately called a “teacher.” During this process, noncritical outputs of the student model are pruned to reduce the parameter size of the model. Then, the SLM is quantized, which reduces the precision of the model’s weights.
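The three ideas named above (distillation, pruning, and quantization) can be illustrated with a toy sketch. This is not NVIDIA's training recipe: the temperature-softened KL loss, the magnitude-based pruning rule, and the symmetric 8-bit quantization below are common textbook variants chosen for illustration, and all function names are hypothetical.

```python
import math

def softmax(logits, temperature=1.0):
    """Convert logits to probabilities, softened by a temperature."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student): how far the student's outputs are from the teacher's."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def magnitude_prune(weights, keep_fraction):
    """Zero out the smallest-magnitude weights (assumed pruning criterion)."""
    keep = max(1, round(len(weights) * keep_fraction))
    threshold = sorted((abs(w) for w in weights), reverse=True)[keep - 1]
    return [w if abs(w) >= threshold else 0.0 for w in weights]

def quantize_int8(weights):
    """Symmetric per-tensor quantization to signed 8-bit integers."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    return [round(w / scale) for w in weights], scale

def dequantize(q_weights, scale):
    """Map 8-bit integers back to approximate floating-point weights."""
    return [q * scale for q in q_weights]

if __name__ == "__main__":
    print(distillation_loss([1.0, 2.0, 3.0], [3.0, 2.0, 1.0]))
    print(magnitude_prune([0.1, -0.9, 0.05, 0.4], keep_fraction=0.5))
    q, scale = quantize_int8([0.5, -1.0, 0.25])
    print(q, dequantize(q, scale))
```

In a real pipeline the loss would drive gradient updates of the student, and pruning would remove whole structures (layers, heads, or channels) rather than individual scalars; the sketch only shows what each step measures or changes.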

With fewer parameters and lower precision, Nemotron-4 4B has a smaller memory footprint and a faster time to first token (how quickly a response begins) than the larger Nemotron-4 LLM, while still maintaining a high level of accuracy thanks to distillation. Its smaller memory footprint also means games and apps that integrate the NIM microservice can run locally on more of the GeForce RTX AI PCs and laptops and NVIDIA RTX AI workstations that consumers own today.
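A rough back-of-the-envelope calculation shows why parameter count and precision both matter for the footprint. The sketch below assumes nominal parameter counts of 15 billion and 4 billion and counts weight storage only (no activations or KV cache); the article does not state which precision the shipped model uses, so the precisions listed are illustrative.

```python
# Bytes needed to store one weight at each (illustrative) precision.
BYTES_PER_WEIGHT = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}

def weight_memory_gb(num_parameters, precision):
    """Approximate weight storage in gigabytes (10**9 bytes)."""
    return num_parameters * BYTES_PER_WEIGHT[precision] / 1e9

if __name__ == "__main__":
    for name, params in [("15B teacher", 15e9), ("4B student", 4e9)]:
        for precision in ("fp16", "int8", "int4"):
            gb = weight_memory_gb(params, precision)
            print(f"{name} @ {precision}: {gb:.1f} GB")
```

Under these assumptions, the weights shrink from about 30 GB (15B at 16-bit) to about 8 GB (4B at 16-bit), and further to about 2 GB if the 4B model were stored at 4-bit precision.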

This new, optimized SLM is also purpose-built with instruction tuning, a technique for fine-tuning models on instructional prompts so they perform specific tasks better. This can be seen in Mecha BREAK, a video game in which players can converse with a mechanic game character and instruct it to switch and customize mechs.
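To make the function-calling idea concrete, here is a minimal sketch of how a game might turn a model's structured output into an in-game action. Everything in it is an assumption for illustration: the JSON shape (`name` plus `arguments`), the action names, and the `dispatch` helper are hypothetical and are not taken from Mecha BREAK or from any NVIDIA API.

```python
import json

# Hypothetical game-side actions; names and signatures are illustrative only.
def switch_mech(mech_name):
    return f"Switched to {mech_name}"

def set_paint(color):
    return f"Paint set to {color}"

ACTIONS = {"switch_mech": switch_mech, "set_paint": set_paint}

def dispatch(model_output):
    """Run the action named in a JSON function call, or return None for plain dialogue."""
    try:
        call = json.loads(model_output)
    except json.JSONDecodeError:
        return None  # not JSON, so treat it as ordinary speech
    if not isinstance(call, dict) or not isinstance(call.get("name"), str):
        return None
    action = ACTIONS.get(call["name"])
    if action is None:
        return None  # the model asked for an action the game does not expose
    try:
        return action(**call.get("arguments", {}))
    except TypeError:
        return None  # arguments did not match the action's signature

if __name__ == "__main__":
    print(dispatch('{"name": "switch_mech", "arguments": {"mech_name": "Alpha"}}'))
    print(dispatch("Sure thing, pilot!"))
```

Validating the call against a fixed table of allowed actions keeps the model from triggering anything the game has not explicitly exposed.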

ACEs Up

ACE NIM microservices allow developers to deploy state-of-the-art generative AI models through the cloud or on RTX AI PCs and workstations to bring AI to their games and applications. With ACE NIM microservices, non-playable characters (NPCs) can dynamically interact and converse with players in the game in real time.

ACE consists of key AI models for speech-to-text, language, text-to-speech, and facial animation. It’s also modular, allowing developers to choose the NIM microservice needed for each element of their particular process.

NVIDIA Riva automatic speech recognition (ASR) processes a user’s spoken language and uses AI to deliver an accurate transcription in real time. The technology builds customizable conversational AI pipelines using GPU-accelerated multilingual speech and translation microservices. Other supported ASRs include OpenAI’s Whisper, an open-source neural net that approaches human-level robustness and accuracy in English speech recognition.

Once translated to digital text, the transcription goes into an LLM, such as Google’s Gemma, Meta’s Llama 3, or now NVIDIA Nemotron-4 4B, to start generating a response to the user’s original voice input.

Next, another piece of Riva technology, text-to-speech, generates an audio response. ElevenLabs’ proprietary AI speech and voice technology is also supported and has been demoed as part of ACE, as seen in the above demo.

Then, NVIDIA Audio2Face (A2F) generates facial expressions that can be synced to dialogue in many languages. With the microservice, digital avatars can display dynamic, realistic emotions streamed live or baked in during post-processing.

The AI network automatically animates face, eyes, mouth, tongue, and head motions to match the selected emotional range and level of intensity. A2F can also automatically infer emotion directly from an audio clip.

Finally, the full character or digital human is animated in a renderer, like Unreal Engine or the NVIDIA Omniverse platform.
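The flow described above (speech recognition, then a language model, then text-to-speech, then facial animation) is a chain of swappable stages. The sketch below shows only that modular shape; the `CharacterPipeline` class and the stub stages are hypothetical stand-ins and do not call Riva, Whisper, Audio2Face, or any other real service.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class CharacterPipeline:
    """Each stage is a plain callable, so any one can be swapped independently."""
    transcribe: Callable[[bytes], str]   # ASR: player audio in, text out
    respond: Callable[[str], str]        # language model: text in, reply out
    synthesize: Callable[[str], bytes]   # TTS: reply text in, audio out
    animate: Callable[[bytes], list]     # facial animation: audio in, frames out

    def run(self, player_audio: bytes) -> dict:
        text = self.transcribe(player_audio)
        reply = self.respond(text)
        speech = self.synthesize(reply)
        frames = self.animate(speech)
        return {"transcript": text, "reply": reply, "speech": speech, "frames": frames}

# Stub stages that fake each step so the sketch runs without any models.
pipeline = CharacterPipeline(
    transcribe=lambda audio: audio.decode("utf-8"),
    respond=lambda text: f"You said: {text}",
    synthesize=lambda reply: reply.encode("utf-8"),
    animate=lambda speech: [{"frame": i, "jaw_open": b % 2} for i, b in enumerate(speech[:3])],
)

if __name__ == "__main__":
    result = pipeline.run(b"swap my mech")
    print(result["transcript"], "->", result["reply"])
    print(result["frames"])
```

Because the stages share only simple input and output types, replacing one speech recognizer or language model with another means changing a single field.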

AI That’s NIMble

In addition to its modular support for various NVIDIA-powered and third-party AI models, ACE allows developers to run inference for each model in the cloud or locally on RTX AI PCs and workstations.

The NVIDIA AI Inference Manager software development kit allows for hybrid inference based on various needs, such as experience, workload, and costs. It streamlines AI model deployment and integration for PC application developers by preconfiguring the PC with the necessary AI models, engines, and dependencies. Apps and games can then orchestrate inference seamlessly across a PC or workstation to the cloud.
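As an illustration of what hybrid inference can mean in practice, the sketch below picks a backend per model from the device's current state. It is not the SDK's API: the `DeviceState` and `ModelRequirements` types, the `choose_backend` function, and the rule itself (prefer local when enough video memory is free, otherwise fall back to the cloud) are assumptions made for this example.

```python
from dataclasses import dataclass

@dataclass
class DeviceState:
    free_vram_gb: float  # video memory currently available on the PC
    online: bool         # whether a cloud endpoint is reachable

@dataclass
class ModelRequirements:
    vram_gb: float            # memory the model needs to run locally
    allow_cloud: bool = True  # whether this model may be served remotely

def choose_backend(device: DeviceState, model: ModelRequirements) -> str:
    """Prefer local inference; fall back to the cloud; otherwise report unavailable."""
    if device.free_vram_gb >= model.vram_gb:
        return "local"
    if device.online and model.allow_cloud:
        return "cloud"
    return "unavailable"

if __name__ == "__main__":
    gaming_pc = DeviceState(free_vram_gb=3.0, online=True)
    print(choose_backend(gaming_pc, ModelRequirements(vram_gb=2.0)))   # fits locally
    print(choose_backend(gaming_pc, ModelRequirements(vram_gb=16.0)))  # too large for this PC
```

A production policy would also weigh latency targets, the game's own GPU load, and per-request cost, which are the kinds of needs the paragraph above mentions.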

ACE NIM microservices run locally on RTX AI PCs and workstations, as well as in the cloud. Microservices currently running locally include Audio2Face in the Covert Protocol tech demo, and the new Nemotron-4 4B Instruct and Whisper ASR in Mecha BREAK.

To Infinity and Beyond

Digital humans go far beyond NPCs in games. At last month’s SIGGRAPH conference, NVIDIA previewed “James,” an interactive digital human that can connect with people using emotions, humor, and more. James is based on a customer-service workflow using ACE.

Changes in communication methods between humans and technology over the decades eventually led to the creation of digital humans. The future of the human-computer interface will have a friendly face and require no physical inputs.

Digital humans drive more engaging and natural interactions. According to Gartner, 80% of conversational offerings will embed generative AI by 2025, and 75% of customer-facing applications will have conversational AI with emotion. Digital humans will transform multiple industries and use cases beyond gaming, including customer service, healthcare, retail, telepresence, and robotics.
